You : Alexa, play Despacito on youtube.

Alexa : You need a paid Spotify account or an Amazon Music account to listen to a specific track.

Sucks, doesn't it? Let's make our own music service. We'll call it ytskill.

This tutorial assumes some knowledge of Python. We will be using Python 3.5.

First, we need to install the Flask web framework via pip for our webserver.

pip install Flask

Next, the youtube-dl library, which our script needs to get the audio from YouTube.

pip install youtube-dl

And finally the Alexa Skills Kit SDK for Python, which gives us access to Alexa's features.

pip install ask-sdk

The Flask adapter that app.py imports later (flask_ask_sdk) is distributed as a separate package.

pip install flask-ask-sdk

Next, let's create our project folder in the home directory and name it ytskill. Inside, we'll create another folder named ytdl and a file named util.py, which will contain our script to fetch music from YouTube.

ytskill/

├─ ytdl/

│ ├─ util.py <---

import youtube_dl


def search_track(search_arg, audio_format=0):
    """Search YouTube and return details of the first result as a dict."""
    ydl_opts = {
        'format': 'bestaudio/best',
        'noplaylist': True,
    }
    with youtube_dl.YoutubeDL(ydl_opts) as yt:
        # download=False fetches metadata only, including the stream URLs
        info = yt.extract_info("ytsearch:{}".format(search_arg), download=False)
    entry = info['entries'][0]
    # audio_format is an index into the list of formats available for the video
    track_audio_url = entry['formats'][audio_format]['url']
    return {
        'artist': entry.get('artist') or entry.get('channel'),
        'title': entry.get('title'),
        'url': track_audio_url,
        'image': entry.get('thumbnail') or None
    }


def track_info(search_arg, audio_format=0):
    """Return the thumbnail URL of the first search result."""
    ydl_opts = {
        'format': 'bestaudio/best',
        'noplaylist': True,
    }
    with youtube_dl.YoutubeDL(ydl_opts) as yt:
        info = yt.extract_info("ytsearch:{}".format(search_arg), download=False)
    entry = info['entries'][0]
    return entry['thumbnail']

As the name suggests, the search_track function searches for a given song on YouTube and takes the first result. It then parses the result to find the audio URL, video title and thumbnail URL, and returns them in a dictionary.
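search_track picks a stream by its position in the formats list, which depends on the order youtube-dl happens to return. As a rough alternative, the sketch below selects an audio-only entry by inspecting each format's fields. It assumes the entries carry the vcodec, acodec, abr and url keys that youtube-dl usually reports; pick_audio_url and the sample data are hypothetical and not part of the tutorial's code.

```python
def pick_audio_url(formats):
    """Return the URL of the highest-bitrate audio-only format, or None."""
    # Audio-only entries report vcodec == "none" and a real audio codec.
    audio_only = [
        f for f in formats
        if f.get("vcodec") == "none" and f.get("acodec") not in (None, "none")
    ]
    if not audio_only:
        return None
    # abr is the audio bitrate; treat a missing value as 0.
    best = max(audio_only, key=lambda f: f.get("abr") or 0)
    return best.get("url")


# Hand-written sample shaped like youtube-dl format entries (not real data).
sample_formats = [
    {"format_id": "18", "vcodec": "avc1.42001E", "acodec": "mp4a.40.2",
     "abr": 96, "url": "https://example.com/video"},
    {"format_id": "139", "vcodec": "none", "acodec": "mp4a.40.5",
     "abr": 48, "url": "https://example.com/audio-low"},
    {"format_id": "140", "vcodec": "none", "acodec": "mp4a.40.2",
     "abr": 128, "url": "https://example.com/audio-high"},
]

print(pick_audio_url(sample_formats))
```

Selecting by field rather than by index would make the AUDIO_FORMAT setting unnecessary, at the cost of a little more code.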

Now let's build the Alexa utility. Create another folder in our project named alexa and, inside it, a file named data.py.

ytskill/

├─ ytdl/

│ ├─ util.py

├─ alexa/

│ ├─ data.py <---

data.py will store configuration that we can change later, such as the audio format to get from YouTube and what Alexa says after executing our commands.

# -*- coding: utf-8 -*-

import gettext

WELCOME_MSG = 'Welcome to Youtube Player! To listen to a song, say play songname'
NOW_PLAYING_MSG = "Now playing {}"
# Index into the list of formats youtube-dl returns for a video
AUDIO_FORMAT = 3

SAMPLE_SONG_URL = 'https://rr7---sn-h50gpup0nuxaxjvh-hg0k.googlevideo.com/videoplayback?expire=1646346344&ei=COwgYu7gAtnZ1wb50YSQBg&ip=102.112.95.7&id=o-AMoKeZMkxrxkF3hJfMFTnvKyl9oVoH-arQjbSt2oxuRp&itag=140&source=youtube&requiressl=yes&mh=wZ&mm=31%2C29&mn=sn-h50gpup0nuxaxjvh-hg0k%2Csn-hc57enee&ms=au%2Crdu&mv=m&mvi=7&pl=18&initcwndbps=828750&vprv=1&mime=audio%2Fmp4&ns=vNqNPLs7rPymfEmuSYuFbmMG&gir=yes&clen=4019742&dur=248.337&lmt=1626232139310588&mt=1646324461&fvip=4&keepalive=yes&fexp=24001373%2C24007246&c=WEB&txp=5532434&n=TsJHJTpRTgYcJ74FiVFm&sparams=expire%2Cei%2Cip%2Cid%2Citag%2Csource%2Crequiressl%2Cvprv%2Cmime%2Cns%2Cgir%2Cclen%2Cdur%2Clmt&lsparams=mh%2Cmm%2Cmn%2Cms%2Cmv%2Cmvi%2Cpl%2Cinitcwndbps&lsig=AG3C_xAwRAIgbn0UxdNkbgf4Xv_LS0GQ0n9qbAFCCfEw-st4mjMof0QCIA3CDxwg8096eUY-4lwj_pnKlbleRod2edJfVJ9B6BOs&sig=AOq0QJ8wRQIhAMbrUc5rAODbd8OMBL36K25Y1EJHH4rInQ3eUGC8C99EAiBY01jHcMIot6rgBxF6lgXvv2iXcZiTgYXRGOqSYQKrYg=='

SAMPLE_SONG_TITLE = 'Bruno Mars Song'
STOP_MSG = "Bye"
DEFAULT_CARD_IMG = 'https://i.imgur.com/QZz8ZHM.png'
UNHANDLED_MSG = "Sorry, I could not understand what you've just said."
HELP_MSG = "You can play, stop, resume listening. How can I help you?"

Now let's write the script so that Alexa can stream (play, stop) the audio URL returned by our search_track function above. Create another file in the alexa folder named util.py.

ytskill/

├─ ytdl/

│ ├─ util.py

├─ alexa/

│ ├─ data.py

│ ├─ util.py <----

from ask_sdk_model.interfaces.audioplayer import (
    PlayDirective, PlayBehavior, AudioItem, Stream, AudioItemMetadata,
    StopDirective, ClearQueueDirective, ClearBehavior)
from ask_sdk_model.ui import StandardCard, Image
from ask_sdk_model.interfaces import display

from . import data


def card_data(title=None, text=None, image=None):
    # type: (str, str, str) -> Dict
    """Bundle the card fields, falling back to the default image."""
    return {
        "title": title,
        "text": text,
        "large_image_url": image or data.DEFAULT_CARD_IMG,
        "small_image_url": image or data.DEFAULT_CARD_IMG
    }


def add_screen_background(card_data):
    # type: (Dict) -> Optional[AudioItemMetadata]
    """Build the metadata shown on devices with a screen."""
    if card_data:
        metadata = AudioItemMetadata(
            title=card_data["title"],
            subtitle=card_data["text"],
            art=display.Image(
                content_description=card_data["title"],
                sources=[
                    display.ImageInstance(
                        url=card_data["small_image_url"] or data.DEFAULT_CARD_IMG)
                ]
            ),
            background_image=display.Image(
                content_description=card_data["title"],
                sources=[
                    display.ImageInstance(
                        url=card_data["small_image_url"] or data.DEFAULT_CARD_IMG)
                ]
            )
        )
        return metadata
    else:
        return None


def play(url, offset, text, card_data, response_builder):
    # type: (str, int, str, Dict, ResponseFactory) -> Response
    """Function to play audio.

    Using the function to begin playing audio when:
        - Play Audio Intent is invoked.
        - Resuming audio when stopped / paused.
        - Next / Previous commands issued.

    https://developer.amazon.com/docs/custom-skills/audioplayer-interface-reference.html#play
    REPLACE_ALL: Immediately begin playback of the specified stream,
    and replace current and enqueued streams.
    """
    if card_data:
        response_builder.set_card(
            StandardCard(
                title=card_data["title"], text=card_data["text"],
                image=Image(
                    small_image_url=card_data["small_image_url"],
                    large_image_url=card_data["large_image_url"])
            )
        )

    # Using URL as token as they are all unique
    response_builder.add_directive(
        PlayDirective(
            play_behavior=PlayBehavior.REPLACE_ALL,
            audio_item=AudioItem(
                stream=Stream(
                    token=url,
                    url=url,
                    offset_in_milliseconds=offset,
                    expected_previous_token=None),
                metadata=add_screen_background(card_data) if card_data else None
            )
        )
    ).set_should_end_session(True)

    if text:
        response_builder.speak(text)

    return response_builder.response


def stop(text, response_builder):
    # type: (str, ResponseFactory) -> Response
    """Issue stop directive to stop the audio.

    Issuing AudioPlayer.Stop directive to stop the audio.
    Attributes already stored when AudioPlayer.Stopped request received.
    """
    response_builder.add_directive(StopDirective())
    if text:
        response_builder.speak(text)

    return response_builder.response

The play and stop functions run when the Alexa play/stop intents are invoked. play takes as its url parameter the audio URL returned by the search_track function in our ytdl/util.py. Also note that alexa/util.py imports data.py, which supplies the default image used when Alexa displays a thumbnail on supported devices, as seen in the card_data and add_screen_background functions.
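One thing to watch in play is that the stream URL doubles as the token, and the signed URLs YouTube returns can be very long. Assuming the token length cap described in the AudioPlayer interface reference applies (taken here as 1024 characters, a figure worth re-checking), a shorter token could be derived by hashing the URL. This is only a sketch: stream_token and MAX_TOKEN_LENGTH are hypothetical names, not part of the SDK or of the tutorial's code.

```python
import hashlib

# Assumed limit on the token length; verify against the AudioPlayer reference.
MAX_TOKEN_LENGTH = 1024


def stream_token(url):
    """Return a short, stable token derived from the stream URL."""
    # SHA-256 always yields 64 hex characters, however long the URL is.
    return hashlib.sha256(url.encode("utf-8")).hexdigest()


# Made-up URL that is longer than the assumed limit.
long_url = "https://example.com/videoplayback?" + "x" * 2000
token = stream_token(long_url)
print(len(long_url) > MAX_TOKEN_LENGTH, len(token))
```

The same URL always maps to the same token, so later AudioPlayer requests that echo the token back can still be matched to a track if you keep a token-to-URL mapping.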

All good. Now let's build the Flask app for our webserver.

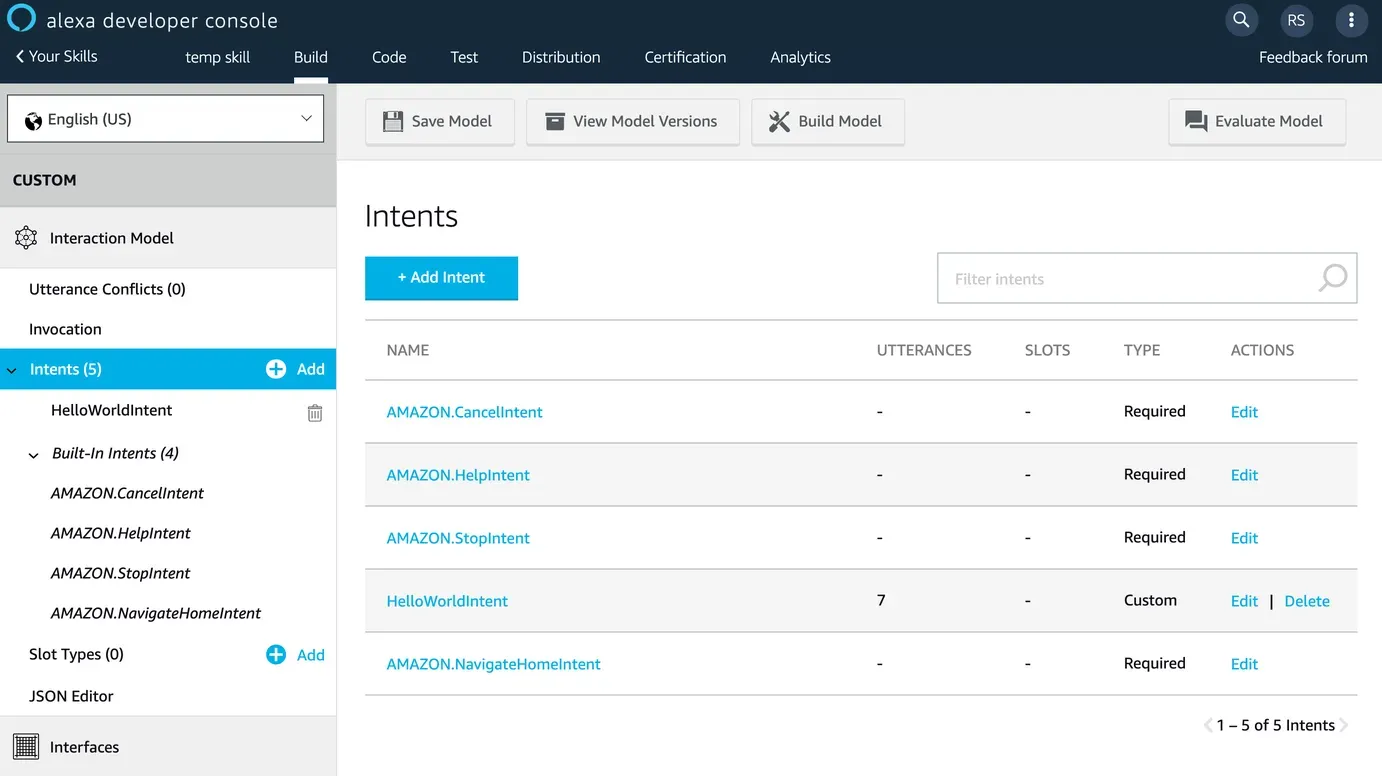

Create an app.py file in the root directory of our project folder ytskill. We need to tell Alexa what to do when we issue commands. Commands map to intents, i.e. what a user is trying to accomplish. The ones we are concerned with here are: Launch, PlayAudio, CancelOrStop, Help and PlaybackFinished. We'll write a handler for each, containing the code to execute when it is invoked.

import logging
import gettext
import os

from flask import Flask
from ask_sdk_core.skill_builder import SkillBuilder
from flask_ask_sdk.skill_adapter import SkillAdapter
from ask_sdk_core.dispatch_components import (
    AbstractRequestHandler, AbstractExceptionHandler,
    AbstractRequestInterceptor, AbstractResponseInterceptor)
from ask_sdk_core.utils import is_request_type, is_intent_name
#from ask_sdk_core.handler_input import HandlerInput
from ask_sdk_model import Response

from alexa import data, util
from ytdl.util import search_track

app = Flask(__name__)
sb = SkillBuilder()

logger = logging.getLogger(__name__)
logger.setLevel(logging.DEBUG)


# ######################### INTENT HANDLERS #########################
# Handlers for the built-in intents and generic
# request handlers like launch, session end, skill events etc.

class LaunchRequestHandler(AbstractRequestHandler):
    """Handler for generic launch intent"""

    def can_handle(self, handler_input):
        # type: (HandlerInput) -> bool
        return is_request_type("LaunchRequest")(handler_input)

    def handle(self, handler_input):
        # type: (HandlerInput) -> Response
        logger.info("In LaunchRequestHandler")
        handler_input.response_builder.speak(data.WELCOME_MSG).set_should_end_session(False)
        return handler_input.response_builder.response


class PlayAudioHandler(AbstractRequestHandler):
    """Handler for PlayAudio intent."""

    def can_handle(self, handler_input):
        # type: (HandlerInput) -> bool
        return is_intent_name("PlayAudioIntent")(handler_input)

    def handle(self, handler_input):
        # type: (HandlerInput) -> Response
        logger.info("In PlayAudioHandler")
        # The spoken search phrase arrives in the "query" slot
        query = handler_input.request_envelope.request.intent.slots[
            "query"].value.lower()
        track = search_track(query, audio_format=data.AUDIO_FORMAT)
        return util.play(url=track['url'],
                         offset=0,
                         text=data.NOW_PLAYING_MSG.format(track['title']),
                         card_data=util.card_data(title=track['title'], image=track['image']),
                         response_builder=handler_input.response_builder)


class CancelOrStopIntentHandler(AbstractRequestHandler):
    """Handler for generic cancel, stop or pause intents."""

    def can_handle(self, handler_input):
        # type: (HandlerInput) -> bool
        return (is_intent_name("AMAZON.CancelIntent")(handler_input) or
                is_intent_name("AMAZON.StopIntent")(handler_input) or
                is_intent_name("AMAZON.PauseIntent")(handler_input))

    def handle(self, handler_input):
        # type: (HandlerInput) -> Response
        logger.info("In CancelOrStopIntentHandler")
        return util.stop(data.STOP_MSG, handler_input.response_builder)


class HelpIntentHandler(AbstractRequestHandler):
    """Handler for providing help information."""

    def can_handle(self, handler_input):
        # type: (HandlerInput) -> bool
        return is_intent_name("AMAZON.HelpIntent")(handler_input)

    def handle(self, handler_input):
        # type: (HandlerInput) -> Response
        logger.info("In HelpIntentHandler")
        handler_input.response_builder.speak(data.HELP_MSG).set_should_end_session(False)
        return handler_input.response_builder.response


class PlaybackFinishedHandler(AbstractRequestHandler):
    """AudioPlayer.PlaybackFinished Directive received.

    Confirming that the requested audio file completed playing.
    Do not send any specific response.
    """

    def can_handle(self, handler_input):
        # type: (HandlerInput) -> bool
        return is_request_type("AudioPlayer.PlaybackFinished")(handler_input)

    def handle(self, handler_input):
        # type: (HandlerInput) -> Response
        logger.info("In PlaybackFinishedHandler")
        logger.info("Playback finished")
        return handler_input.response_builder.response


# ################## TO DO #############################
# Implement other Intents

# ################## EXCEPTION HANDLERS #############################

class CatchAllExceptionHandler(AbstractExceptionHandler):
    """Catch all exception handler, log exception and
    respond with custom message.
    """

    def can_handle(self, handler_input, exception):
        # type: (HandlerInput, Exception) -> bool
        return True

    def handle(self, handler_input, exception):
        # type: (HandlerInput, Exception) -> Response
        logger.info("In CatchAllExceptionHandler")
        logger.error(exception, exc_info=True)
        handler_input.response_builder.speak(data.UNHANDLED_MSG).ask(
            data.HELP_MSG)
        return handler_input.response_builder.response


# ###################################################################

sb.add_request_handler(LaunchRequestHandler())
sb.add_request_handler(PlayAudioHandler())
sb.add_request_handler(CancelOrStopIntentHandler())
sb.add_request_handler(PlaybackFinishedHandler())
sb.add_request_handler(HelpIntentHandler())

# Exception handlers
sb.add_exception_handler(CatchAllExceptionHandler())

skill_response = SkillAdapter(
    skill=sb.create(),
    skill_id='amzn1.ask.skill.0bc37a08-c240-42e7-b60d-e81ef23cf3eb',
    app=app, verifiers=[])

skill_response.register(app=app, route="/")  # Route API calls to / towards skill response

# Start Flask's development server only when run directly,
# not when a WSGI server such as gunicorn imports this module.
if __name__ == '__main__':
    app.run(debug=True)
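The SDK tries the registered request handlers in the order they were added and uses the first one whose can_handle returns True. The plain-Python sketch below imitates that dispatch loop so the idea can be tried without the SDK installed. Handler, LaunchHandler, PlayHandler and dispatch are hypothetical stand-ins, and requests are simplified to dictionaries.

```python
class Handler:
    """Hypothetical stand-in for the SDK's AbstractRequestHandler."""

    def can_handle(self, request):
        raise NotImplementedError

    def handle(self, request):
        raise NotImplementedError


class LaunchHandler(Handler):
    def can_handle(self, request):
        return request["type"] == "LaunchRequest"

    def handle(self, request):
        return "welcome"


class PlayHandler(Handler):
    def can_handle(self, request):
        return (request["type"] == "IntentRequest"
                and request["intent"]["name"] == "PlayAudioIntent")

    def handle(self, request):
        return "play"


def dispatch(handlers, request):
    """Run the first handler, in registration order, that accepts the request."""
    for handler in handlers:
        if handler.can_handle(request):
            return handler.handle(request)
    # With no match an error is raised; in app.py such errors are
    # expected to end up in CatchAllExceptionHandler.
    raise LookupError("no handler for request")


handlers = [LaunchHandler(), PlayHandler()]
print(dispatch(handlers, {"type": "LaunchRequest"}))
print(dispatch(handlers, {"type": "IntentRequest",
                          "intent": {"name": "PlayAudioIntent"}}))
```

Because the first match wins, a broad handler registered early can hide a more specific one registered after it.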

All done. Now we need to deploy the webserver. I'd advise taking a look at this tutorial, which uses nginx, gunicorn and supervisor.

Next, sign in to the Amazon developer console and click the Create Skill button to get started. On the next page, enter the skill name, e.g. Youtube Player. The invocation name, i.e. the phrase a user speaks to start using your Alexa skill, is set by the invocationName field of the interaction model below.

We then need to choose a model to add to the skill. These models are like templates that have been pre-designed by the Amazon team based on some common use cases. We'll specify our own, so we'll select the Custom model and edit it in the JSON Editor.

This is the model template we need to paste in the JSON Editor.

{
    "interactionModel": {
        "languageModel": {
            "invocationName": "youtube player",
            "intents": [
                {
                    "name": "PlayAudioIntent",
                    "slots": [
                        {
                            "name": "query",
                            "type": "AMAZON.SearchQuery"
                        }
                    ],
                    "samples": [
                        "play music by {query}",
                        "play songs by {query}",
                        "play videos by {query}",
                        "search for {query}",
                        "Play {query}"
                    ]
                },
                {
                    "name": "AMAZON.PauseIntent",
                    "samples": []
                },
                {
                    "name": "AMAZON.ResumeIntent",
                    "samples": []
                },
                {
                    "name": "AMAZON.StopIntent",
                    "samples": []
                },
                {
                    "name": "AMAZON.CancelIntent",
                    "samples": []
                },
                {
                    "name": "AMAZON.StartOverIntent",
                    "samples": []
                },
                {
                    "name": "AMAZON.FallbackIntent",
                    "samples": []
                }
            ],
            "types": []
        }
    }
}

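We have specified a few sample utterances for the PlayAudioIntent. Combined with the invocation name (for example "Alexa, ask Youtube player to play Despacito"), they let us say things like:

"play music by {query}",
"play songs by {query}",
"play videos by {query}",
"search for {query}",
"Play {query}"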
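Whatever the user says in place of {query} reaches the skill as the value of the query slot in the request JSON. The sketch below reads that value from a request envelope represented as a plain dictionary and returns None when the slot is empty, a case in which calling .lower() directly on the value would fail. extract_query and the sample envelope are hypothetical and only mirror the general shape of an IntentRequest.

```python
def extract_query(envelope):
    """Return the lower-cased search phrase from the query slot, or None."""
    request = envelope.get("request", {})
    slots = request.get("intent", {}).get("slots") or {}
    value = (slots.get("query") or {}).get("value")
    return value.strip().lower() if value else None


# Hand-written envelope shaped like an IntentRequest (not captured from Alexa).
sample_envelope = {
    "request": {
        "type": "IntentRequest",
        "intent": {
            "name": "PlayAudioIntent",
            "slots": {"query": {"name": "query", "value": "Despacito"}},
        },
    }
}

print(extract_query(sample_envelope))
```

A handler could answer with the help message instead of searching when the result is None.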

Finally, you need to specify the backend endpoint of our Alexa skill, that is, the URL of our Flask webserver. Alexa requires this endpoint to be served over HTTPS.

Now we build our skill but don't publish it; it should then appear in our Alexa app.

You can find a repository of the code here.